We hid backdoors in binaries — Opus 4.6 found 49% of them

BinaryAudit benchmarks AI agents using Ghidra to find backdoors in compiled binaries of real open-source servers, proxies, and network infrastructure.

Explore our research on AI agents, benchmarking, and evaluation

BinaryAudit benchmarks AI agents using Ghidra to find backdoors in compiled binaries of real open-source servers, proxies, and network infrastructure.

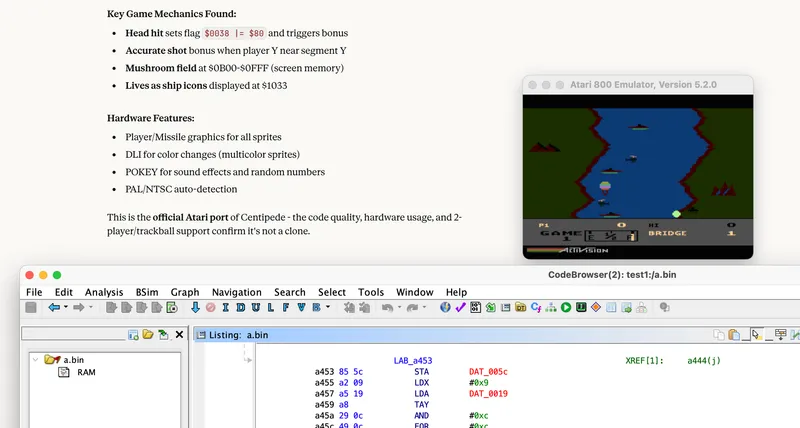

Connecting Claude to Ghidra via MCP to reverse engineer River Raid. A test of AI agents against 6502 assembly, memory mapping, and 80s game logic.

A lot of vendors pitch AI SRE. We tested 14 models across 11 programming languages; even the best ones struggle with instrumenting code with the leading open-source standard, OpenTelemetry.
