I am excited to see the progress in AI. But not everyone shares this sentiment.

Outside of the tech bubble, AI is an invasive technology with no opt-out options. Here is a brief story about the contrast between my enthusiasm for using AI to visualize Black Mirror season ratings and the Internet's stark reaction.

Drawing charts

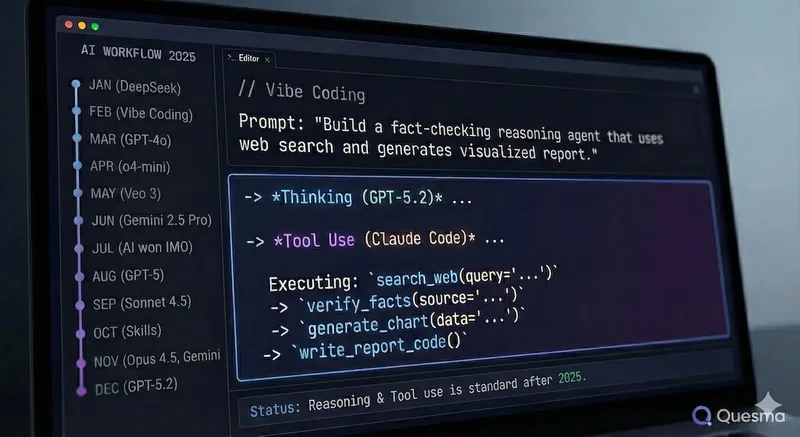

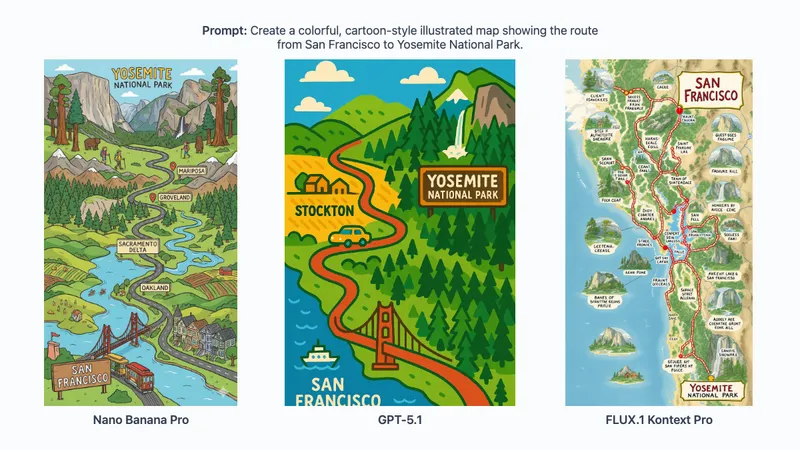

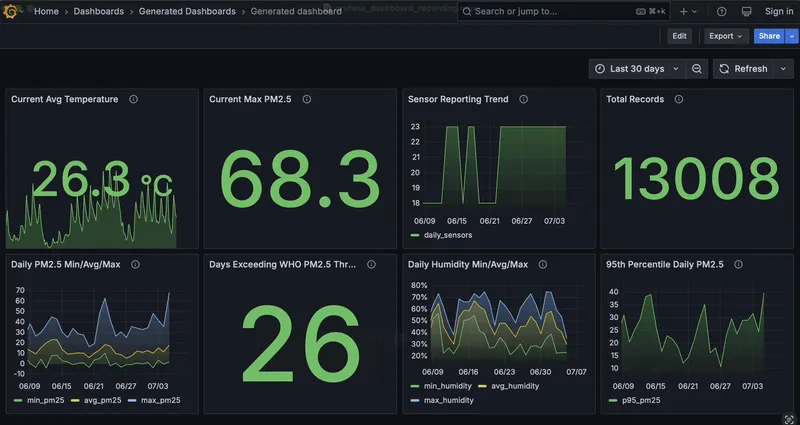

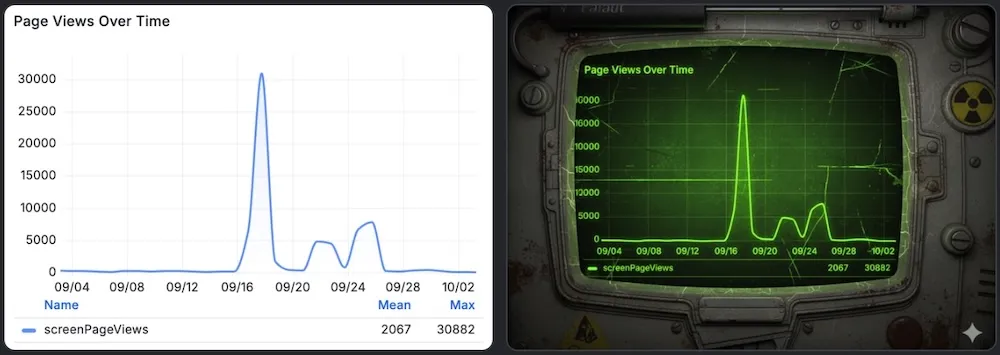

In early 2025, we explored creating and styling charts with AI. We also tested whether image models could take us beyond ggplot2 and Grafana visuals.

Prompt for Gemini 2.5 Flash Image (Nano Banana): “Turn this style to Fallout. Content needs to stay precisely the same.”

Styling charts with AI was unreliable. It often took too many creative liberties and altered the data. It was beautiful for showing cherry-picked results, yet useless for anything else. Now the newest Nano Banana Pro opens new possibilities, including creating infographics. I bet it is much better at styling. But can it create a chart from scratch?

Black Mirror vibes

I love Black Mirror seasons 1-4 for their dark, intense form, aesthetics, and focus on substance. It has been quite some time since I last watched it. I was curious whether the latest season was any good. What better way to find out in 2025 than to have AI tell me?

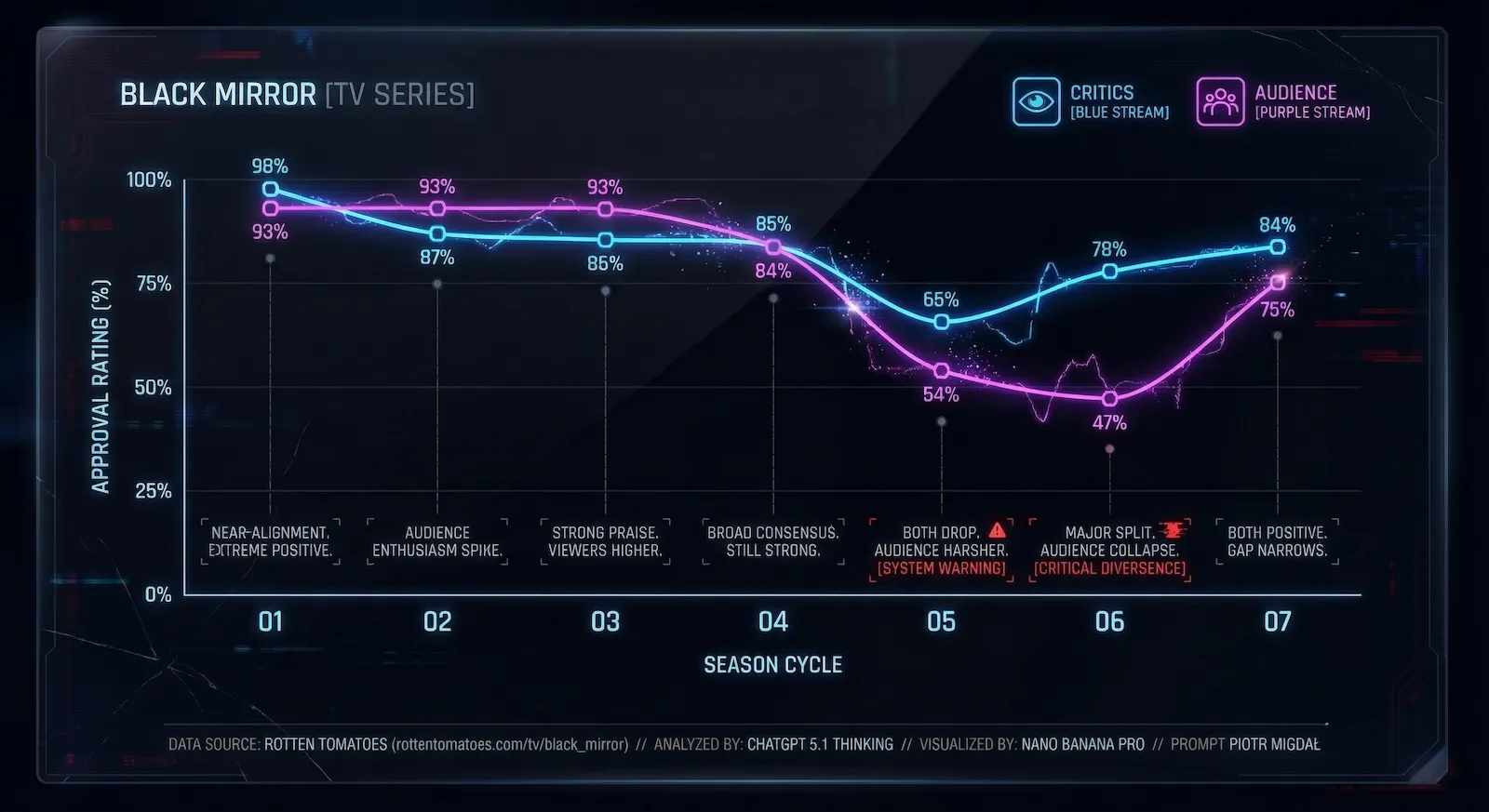

Numbers without context are meaningless, so I wanted to plot the ratings of all Black Mirror seasons. And for a twist, I wanted to create the chart in the show’s distinct style.

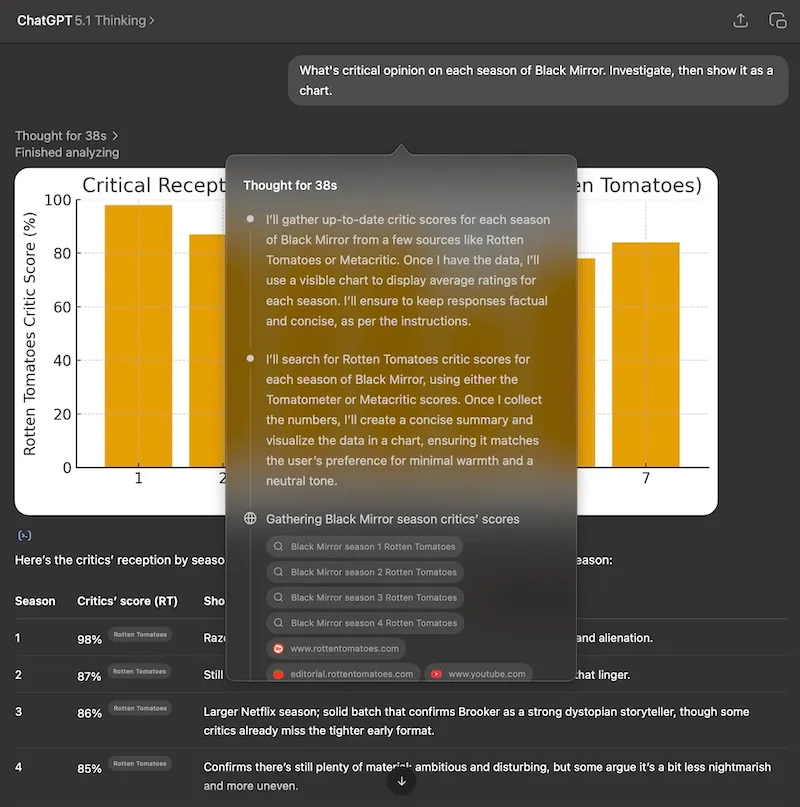

Using plain Nano Banana Pro within Google AI Studio was not enough. It didn't use external search tools and was confined to its training data. It was certain that Season 7 does not exist. This is a common pattern with knowledge cut-off dates, and it sometimes results in embarrassing errors, like Google AI not knowing that in Dec 2025 the next year is 2026.

So, I did it in two stages. First, I asked ChatGPT 5.1 Thinking about the critical reception of each season. It checked Rotten Tomatoes, my go-to source. I asked it to get both viewer and critic scores and show them as a table.

Two one-line prompts:

- What’s critical opinion on each season of Black Mirror. Investigate, then show it as a chart.

- Are viewers’ reviews similar?

Then, I used the output as a prompt in Nano Banana Pro.

The prompt was simple:

Create a chart - minimal, yet in aesthetics of Black Mirror - on its reception.

For comments - keep these low, not full sentences as in data below. Be faithful to numbers, though.

Data comes from Rotten Tomatoes, https://www.rottentomatoes.com/tv/black_mirror. Data analyzed by ChatGPT 5.1 Thinking, visualized by Nano Banana Pro.

Data:

Season | Critics | Audience | Relationship
--- | --- | --- | ---
1 | 98% | 93% | Both extremely positive, near-alignment.
2 | 87% | 93% | Critics very positive, audience even more enthusiastic.
3 | 86% | 93% | Same story: strong critical praise, viewers slightly higher.
4 | 85% | 84% | Almost identical; broad consensus it’s still strong.
5 | 66% | 54% | Both see a drop; audience significantly harsher than critics.
6 | 78% | 47% | Major split: critics think it’s a decent rebound, audience score collapses.
7 | 84% | 75% | Both positive; viewers still cooler than critics but gap narrows again.

Then I asked for a small edit:

Beautiful! A few small changes.

BLACK MIRROR [TV SERIES] - instead of the current title.

“Terminal ID” makes no sense. Instead - write: PROMPT PIOTR MIGDAŁ

Reception

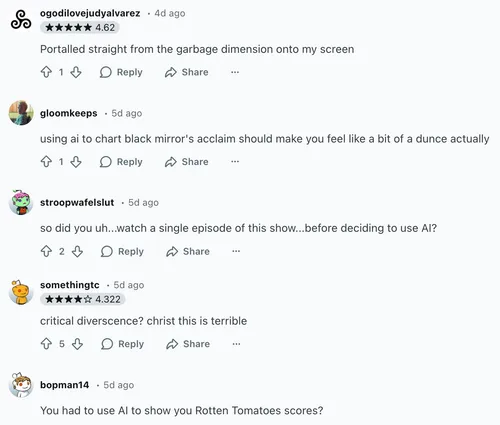

Excited, I posted the result online: first on my Facebook page, then on the r/blackmirror subreddit as “Using AI to chart Black Mirror’s acclaim feels like living in the future”, with a note:

Is the new season of Black Mirror any good?

I asked ChatGPT to tell me and Nano Banana Pro to turn it into a chart.

We live in the future. The Black Mirror future. But still—the future.

I expected some positive feedback, especially since the data showed the latest season was much better than the previous few. To put it mildly, people were not too impressed.

My post got removed. No hard feelings there - posting on Reddit and being afraid of being banned is like going to the gym and being afraid of sweating. However, I was surprised by the unanimously harsh reception. Most likely, none of the commenters would be able to create such graphics themselves.

More interesting was the response on my social media. While some people were intrigued by the result or curious about the prompts, others were irritated that I had used AI at all.

One pop-culture blogger was displeased that I used AI instead of just clicking through Rotten Tomatoes. Sure, I could have, but getting data from 7 pages takes a bit of clicking. I asked her:

Is there a virtue in copy-and-paste?

I didn’t get a reply from her. Instead, someone else followed up:

Is there a virtue in getting dumb by using AI?

Sure, I am aware that there are many ways to become “dumb” by using AI, especially if it involves copying and pasting without learning. But I like using it to extend my knowledge, especially for topics where I don’t know the search terms in advance. That is, for example, how I learned about phonesthemes.

Outside the bubble

When using any tool (AI included), we take responsibility. If AI confabulates and we share without checking, it is our fault. If we do not attribute sources or misattribute them, it is our fault.

But a significant portion of the population does not view AI merely as a tool. If something does not work, AI is called stupid and subjected to a level of scrutiny and nitpicking not applied to humans. If AI does work, it is seen as even worse.

For any “disruptive tech”, there is friction. And AI is disruptive on many levels:

- It is an “intellectual plastic” – so cheap to create that it floods social media with synthetic filler. It is harder to find quality human-made work.

- It makes creating fake content easy. I need to double-check data, since superficial polish is an increasingly weak indicator of quality.

- It takes jobs - both ones people love and ones people need.

- It challenges some of our core identities as humans - intellectual, creative, and even spiritual.

Getting out of the bubble is sometimes refreshing. And sobering. It is crucial to know that the enthusiasm found on Hacker News, AI Tinkerers, or at your AI lab is not widespread.

Those of us who train AI models or build AI-assisted tools need to realize that if we don’t want to face pitchforks, we must make it easier to use AI for the benefit of humanity, and harder to misuse it. I don’t have a clear answer on how.

For most people, AI feels like a Black Mirror episode they never signed up for. In their story, we are the villains.
